I’m not enjoying ‘The Value Of Innovation’, the latest book from ROI guru Jack Phillips, but I’m sticking with it. Business books are a bit like conferences for me these days, in that, for the most part, they exist to make me angry. And if I get angry enough, once the first wave of quiet seething is over, it can become a trigger for some kind of insight.

One of the reasons I’m having to stick with the book is that we’re getting lots of ‘can you help me measure this’ type innovation work at the moment. And, in classic ‘someone somewhere already solved your problem’ fashion, Phillips is purportedly the go-to guy when it comes to ROI. He’s the one who already solved your problem. Except that, apart from stating several times that everything is measurable, his book only very rarely justifies the statement with any evidence.

This problem is particularly acute when it comes to intangibles. Phillips admits they are important, but that’s as far as his story goes. In the end, he declares that the very definition of an intangible is something that is ‘unquantifiable’ and therefore something that won’t be included in any ROI calculation. Which sounds like the ultimate cop-out to me. Except, I guess that Phillips’ glib statement will have something to do with the fact that his primary audience is other finance-type people. Who, as far as my experience goes, not only don’t understand intangibles, but actively don’t want to understand them.

As I’m gritting my teeth reading the book, I’m also noticing that Phillips has no comprehension of complexity and complex systems. And, perhaps less surprisingly, he also has no conception that the level of Innovation Capability of an enterprise has to have an impact on ROI calculations. I’ve said a little bit about that latter topic in the last two issues of the SI ezine. It’s the complex systems topic that I wanted to explore a bit here.

The straw that broke this camel’s back was a mini-case study concerning Wal-Mart, and Phillips’ praise for the not-so-bright spark who’d calculated the ‘cost’ of every minute a delivery truck was at the unloading bay waiting to be unloaded. I have a feeling this kind of misguided thinking is why so many employees are actively disengaged from their work and trust in corporations is at a historic low. Everybody is on the clock. The drivers get stressed. The truck unloaders get stressed. Job satisfaction takes a downward turn. Employees feel out of control. They feel ‘it’s not fair’ when things go wrong that have nothing to do with them.

So then what happens? When we get treated unfairly, we compensate. We sneak an extra break when the boss isn’t looking. Or we ‘borrow’ some office stationery. Or we add a more expensive meal to the expenses claim. Which, when the illicit activity eventually gets found out, means the bean-counters get angry. And when bean-counters get angry, all they want to do is dream up ever more convoluted beans to count. Which means that, as well as costing out lost minutes at the loading bay, they bring in tools to monitor breaks, put cameras in the stationery cupboard, and put checks in place to reject expense claims with an over-allowance dinner receipt on them. Which, surprise, surprise, now closes the loop and makes employees feel even less trusted and even less in control.

All the time this toilet-swirl of distrust is going on, the bean-counters are rubbing their hands with glee because their figures are getting better, because things like trust and control are ‘intangible’ and therefore aren’t on the balance sheet. The bean-counters, in most cases, only even begin to realise there’s a problem when very tangible things like increased sick-rates and staff-turnover start to show up on their dashboards. By which time, sadly, the vicious cycle has turned into a tailspin and the game is just about over.

The simple truth is this. Working out the ‘cost’ of waiting delivery trucks is a simple response to what is in reality a complex problem. Moreover, it is just one of thousands of wrong simple solutions to a complex problem.

If a system includes two or more humans, it is complex. And, given that almost every measurement in existence is done by one human on another, almost every ‘measurement problem’ is complex.

And if that is the case, any measurement situation demands new rules of behaviour. None of which will be found in Jack Phillips’ book. First of all, there are no absolutes any more. This means there is little point in quantifying things. Far better instead to be looking out for relative changes, for ‘vectors’ and ‘rules of thumb’. None of which are popular with today’s bean-counters, granted, but that’s a lesson they’re going to have to learn. Most likely the hard way.

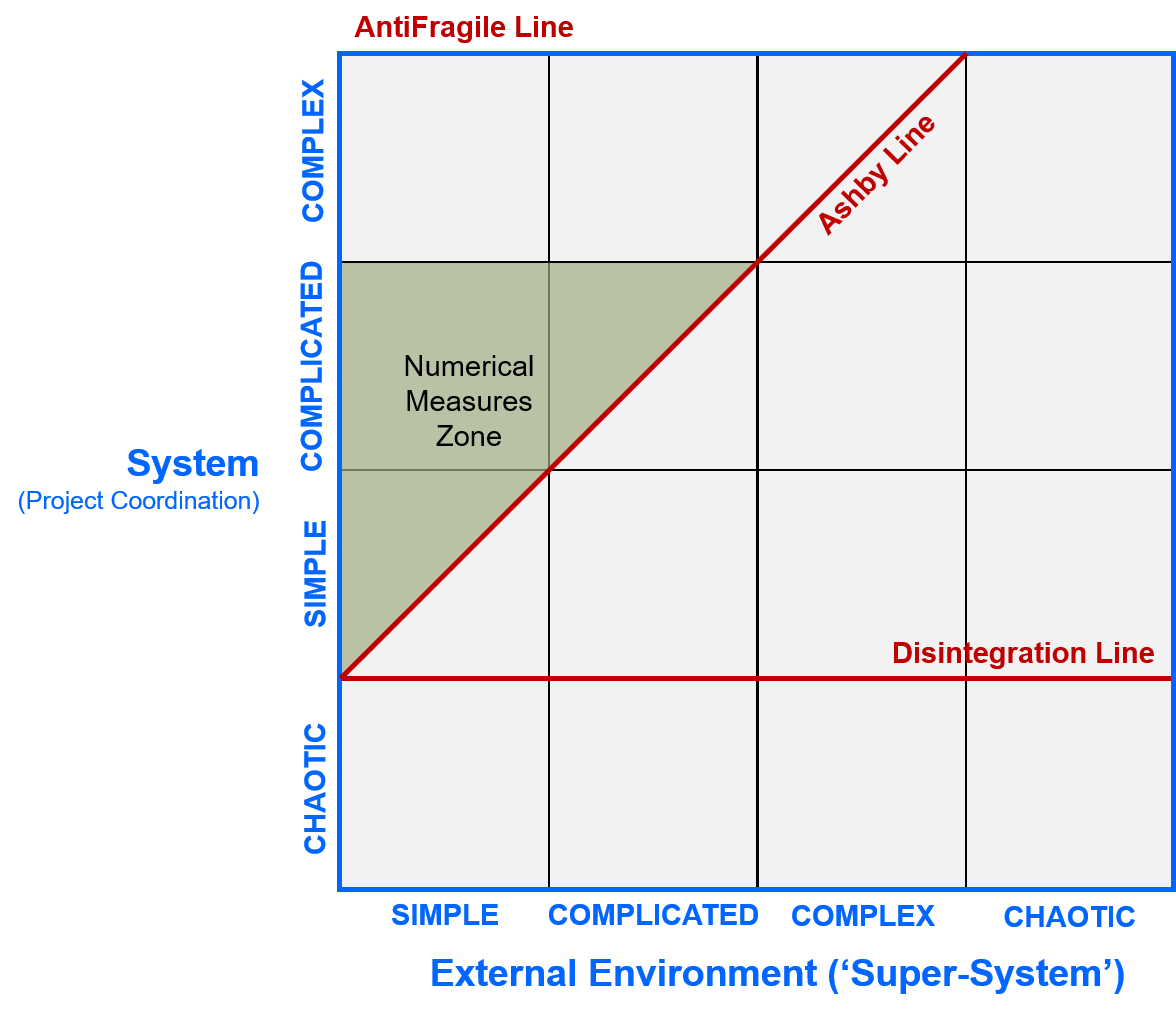

Thinking about the Complexity Landscape Model, my current thinking is this. The only sensible place to make quantitative measurements, and use the information to ‘run the business’ is here:

Numbers are for robots communicating with other robots. Robots that have been taught to operate in the ‘simple’ world: ‘do this; keep doing it forever, until I tell you to stop’. Perfect for automated processes where the aim is maximum efficiency. Robots aren’t quite so good at ‘complicated’ situations yet. They will be, because complicated problems are amenable to finding a clear and definitive ‘right’ answer. Right now, however, it is generally going to be a human who makes such decisions and calculations. I used to design jet-engines. That’s a complicated problem, but it is also one in which it is possible to make a clear calculation that tells a designer that if they manage to redesign a component and reduce the weight of the engine by Xkg, the net worth to the Company will be $Y. Not quite so simple, obviously, but once you’re taught to take into account s-curves, the ‘law of diminishing returns’ and other mechanistic effects, you’re pretty much good to go.

I think where I’m going with all this is to try and reach some kind of rule-of-thumb measurement heuristics. The first of which was going to be, ‘if your problem situation is not in the Numerical Measures Zone, quantification is pointless, and will probably lead you astray’.

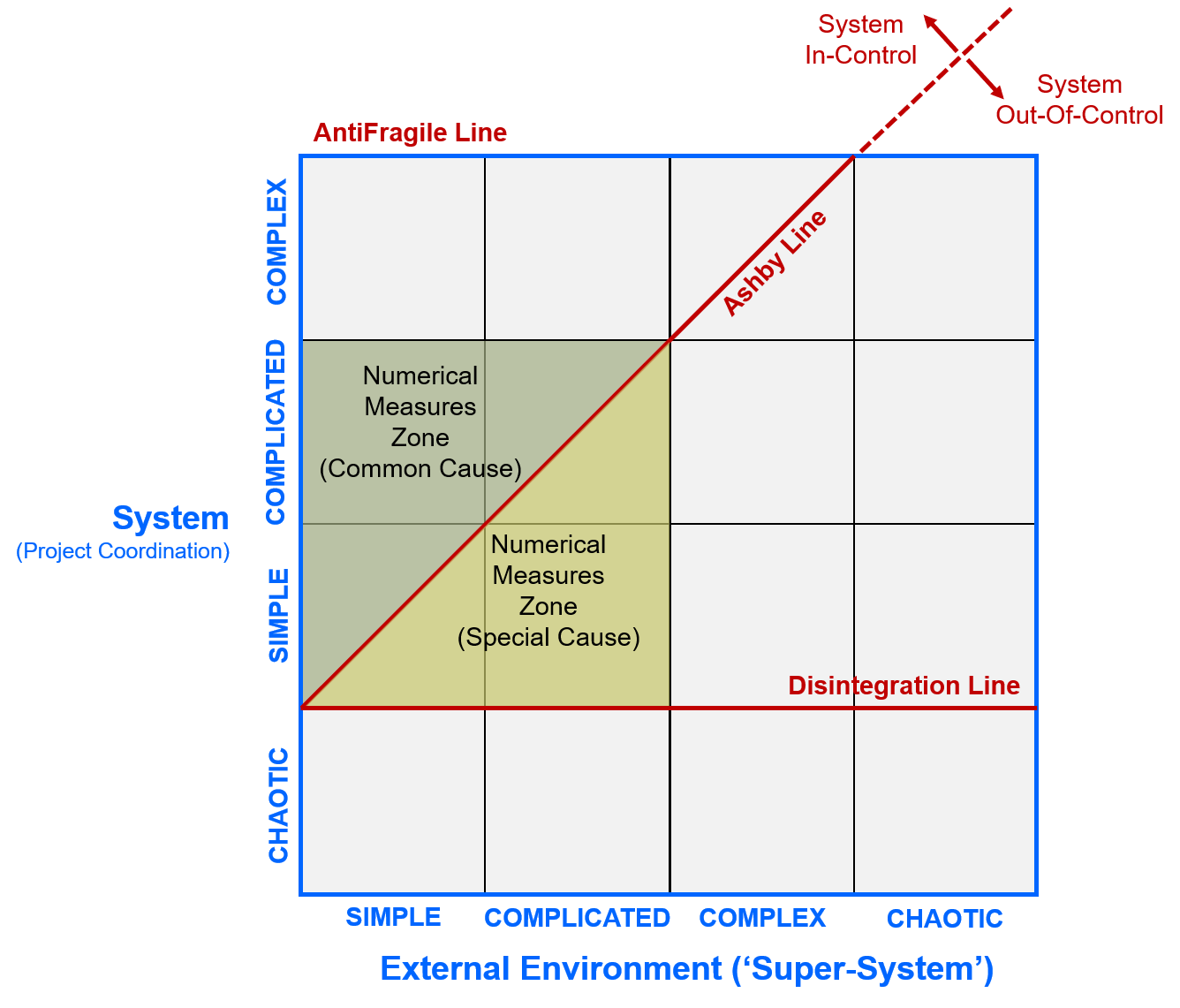

But then I thought about my old hero, W. Edwards Deming – a man who pretty much lived in the Numerical Measures Zone – and his careful division of the measurement world into ‘common’ and ‘special’ cause situations. I find the distinction has become blurry in a lot of post-Deming continuous improvement initiatives. Which is more than a shame, because, if a person mixes the two up, the only result is that they will make things worse. If a system is ‘in control’, the job of the continuous improvement team is to focus on ‘common cause’ measurements if the wish to improve the system. If the system is ‘out of control’, the job is to focus on ‘special cause’ measurements. Somewhat conveniently, this distinction between ‘in’ and ‘out’ of control maps beautifully onto the CLM like this:

So now I can really propose a new heuristic: ‘if your problem situation is not in either of these Numerical Measures Zones, quantification is pointless, and will definitely lead you astray’.
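For anyone who wants to see Deming’s ‘in control’ versus ‘out of control’ distinction in something more concrete than words, here is a minimal Python sketch of an individuals control chart, one conventional way of telling common-cause variation from a special-cause signal. It is an illustration only, not something taken from Phillips’ book or from the CLM. The function name `classify_points`, the invented cycle-time figures for an automated process, and the choice of 3-sigma limits estimated from the average moving range are all assumptions made for the example.

```python
from statistics import mean

# Standard control-chart constant d2 for a moving range of two consecutive points.
D2_FOR_PAIRS = 1.128


def classify_points(samples, baseline_size=None):
    """Hypothetical helper: flag special-cause points on an individuals chart.

    Limits are set from a baseline period (all samples if baseline_size is None)
    at the centre line plus/minus three estimated standard deviations.
    """
    baseline = samples[:baseline_size] if baseline_size else samples
    if len(baseline) < 2:
        raise ValueError("need at least two baseline points")

    centre = mean(baseline)
    # Average absolute difference between consecutive baseline points.
    moving_ranges = [abs(b - a) for a, b in zip(baseline, baseline[1:])]
    sigma = mean(moving_ranges) / D2_FOR_PAIRS

    lower, upper = centre - 3 * sigma, centre + 3 * sigma
    # Points outside the limits are treated as special-cause signals.
    special = [i for i, x in enumerate(samples) if x < lower or x > upper]

    return {
        "centre": centre,
        "lower": lower,
        "upper": upper,
        "special_cause_indices": special,
        "in_control": not special,
    }


# Invented cycle times (seconds) for an automated process; the last one is odd.
cycle_times = [30, 32, 29, 31, 30, 33, 28, 31, 30, 55]
result = classify_points(cycle_times, baseline_size=9)
print(result["in_control"], result["special_cause_indices"])
```

If nothing is flagged, the variation is common cause and any improvement has to come from changing the system itself. If a point is flagged, the sensible move is to go and find out what was different about that one occasion, rather than redesigning everything around it.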

I think there might be more profound implications to come out of this. But first, I think I need to go test it with a few semi-friendly bean-counters…