Forgive me if you’ve heard me tell this one before. I’ve been put in the highly privileged position of designing a curriculum for a new technology degree. My specific brief is to drag the subject into the 21st Century. If that sounds like something of an oxymoron – technology/21st Century – bear with me a second or two.

Back in the late 90s, after several years of pleading, I was allowed to teach a TRIZ Module as an option to some of the final year Engineering students at the University of Bath. I’d originally asked if I could teach First Years and had been told it was ‘too dangerous’. I didn’t explore what that meant. At the time I took it to mean that the Head of Department had no idea what TRIZ was and therefore – understandably I could imagine – wasn’t prepared to take the risk. The degree programme had just hit the UK Top Five, and no-one wanted to jeopardise the growing reputation. Again, I could understand that. So, anyway, we got to the end of the optional Final Year Module and, as was the Department’s policy, the students were asked to provide feedback on the quality of the teaching and content. The consensus was basically one of outrage. Outrage of the ‘why weren’t we taught this in the First Year’ variety. Needless to say, I wasn’t invited to run the Module again the following year. Either with the Final or the First Years.

This memory came starkly back to the front of my mind as I started thinking about what a 21st Century technology degree might look like. ‘Dangerous stuff’ seemed to be one of the conclusions.

Beyond that, I decided to go back and look at the rest of the Bath curriculum from the same years I was teaching there. Then I compared it with a current day version, and then the one I was taught during my degree back in the Stone Age (1981-4). To be honest, there wasn’t a lot of difference between the three. Two things stood out. First up, there was a lot less mind-numbing derivation of formulae in the later curricula. Second up, there was an awful lot more use of software analysis tools in the 1999 and, particularly, the 2020 curricula. If a student wanted to do a stress or flow analysis back in the early 1980s, they had to write the software to do it; today, it’s all been done for them, so the student just has to build a model and then press the magic button. Beyond that, however, all the other differences were second order optimisations. Which ultimately means that, if my post-degree working experience was anything to go by, 90+% of the content would never be of any use whatsoever ten minutes after the graduation ceremony. And, moreover, the 10% that does still get used either gets used as first-principle ‘rules of thumb’ or gets Googled. The former being a good thing – although, as it turns out, 1984 seems to be one of the last years that any engineer got taught anything from first principles. The latter serving as a reminder that today it’s possible to answer almost any question by looking it up.

And, boy, is there a lot to look up these days. The technology ‘database’ has become an awful lot bigger in the past forty years. A lot more books, a lot more papers, and an awful lot more specialisation. What I’m a lot less sure about, however, is how much of this exponential increase in content is signal and how much is noise. Actually, that’s not true. When we force ourselves to go back to first principles and ask how much of the most recent content has altered or expanded our first-principle knowledge base, the answer is very stark: it hasn’t really changed at all. The signal is still pretty much the same signal. If it has grown, it has grown linearly. The noise is the thing that’s grown exponentially.

I think this is significant. Great that things can be looked up on demand. Not so great if the technologist doesn’t have enough first-principle understanding of what they’ve looked up to know whether the answers they produce make sense. It’s really useful to be able to run a finite element analysis to work out what the maximum stresses in a structure are and where they occur, but not so great if you don’t understand that stress = force/area will get you 90% of the way there. And will therefore allow you to establish whether the software is giving you something like the right answer.
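That kind of sanity check can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a prescribed method: the function names, the example load case and the factor-of-ten plausibility band are all hypothetical choices made for this sketch.

```python
def nominal_stress(force_n: float, area_m2: float) -> float:
    """First-principle estimate: stress = force / area (Pa)."""
    if area_m2 <= 0:
        raise ValueError("area must be positive")
    return force_n / area_m2


def is_plausible(fea_stress_pa: float, force_n: float, area_m2: float,
                 max_ratio: float = 10.0) -> bool:
    """Return True if the software's peak stress is within a factor of
    max_ratio of the hand estimate. The factor of ten is an assumed,
    deliberately loose band: stress concentrations can legitimately push
    the peak above nominal, but not by orders of magnitude."""
    ratio = fea_stress_pa / nominal_stress(force_n, area_m2)
    return 1.0 / max_ratio <= ratio <= max_ratio


if __name__ == "__main__":
    # Hypothetical load case: 10 kN pulling on a rod of 100 mm^2 cross-section.
    force, area = 10_000.0, 100e-6
    print(f"hand estimate: {nominal_stress(force, area) / 1e6:.0f} MPa")
    print("120 MPa plausible?", is_plausible(120e6, force, area))
    print("120 GPa plausible?", is_plausible(120e9, force, area))
```

A peak of 120 MPa sits comfortably near the hand estimate; 120 GPa almost certainly means a units slip or a bad model, and it takes a first-principle number to notice.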

That seemed to offer another clue as to what a new curriculum might do. If finding answers is easy, then surely, finding the right question to ask becomes much more important. Here again I think the technology education system has let students down badly. My main evidence for this is the fact that, particularly in the last three or four years since Generation Z has started entering the workplace, I find I can no longer set exercises to students that start with the phrase, ‘think of a problem…’ The first time I did it, it took me ten minutes to realise that everyone in the room was looking at me with an expression close to blind panic because they had no idea what a problem was.

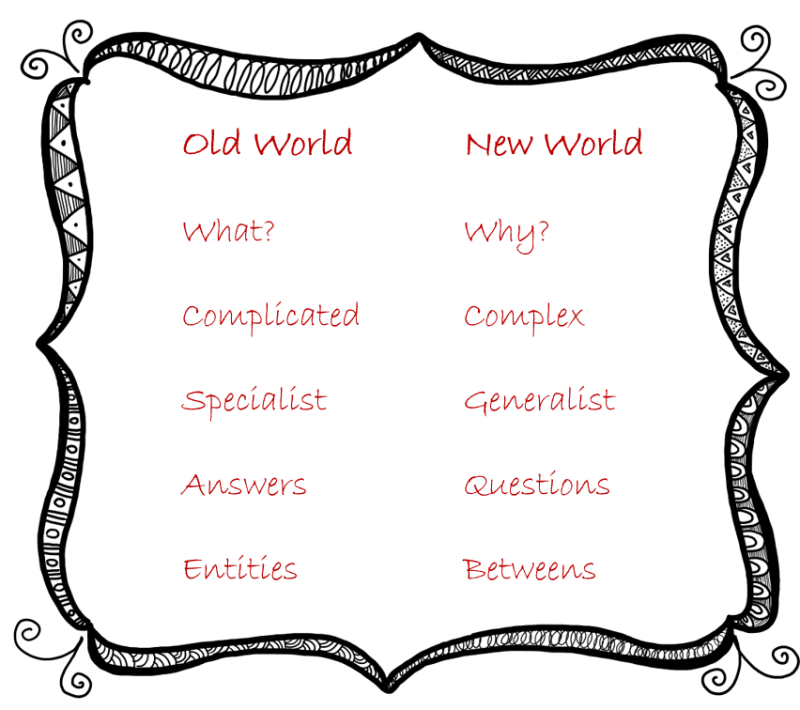

At the same time this is happening, the world is becoming more complex. Every problem is increasingly likely to be connected to every other problem. That then highlights another issue with the way technology is taught today. The complexities of inter-disciplinary, interdependent problems make for a really bad fit in an academic world that is still taught in tight specialisations. And which, more critically, tends to inherently avoid the ‘human’ issues that can no longer be excluded from whatever challenges are being worked upon. Without wishing to delve too deeply into technology cliché-land, I think it’s fair to say that most technologists (‘geeks’ to use the modern parlance) become technologists as a means of avoiding as many of the complex human-relationship issues as they can. Pure technology problems (if such a thing exists any longer) have the possibility to be merely complicated. Which means there is the potential for a ‘right’ answer. Real technology problems, however, are complex. Which means there is no longer such a thing as the ‘right’ answer. As far as I can tell technology educators are either not aware of this shift, or, more likely, have no desire to become aware of it. As a consequence, they do their students an enormous disservice.

Finally, let’s add one more big challenge into the technology education mix. Back to the idea of the expansion of knowledge for a second. Even if it is the case that the rate of creation of ‘new’ first-principle knowledge is slow and essentially linear, the fact that it is increasing at all inevitably means that the number of combination possibilities increases exponentially. Which means we find ourselves back in complex territory again. And another technology education shortfall: almost no curriculum I’ve seen has even started to think about – never mind solve – the issue of how to combine partial solutions from different domains.
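To put rough numbers on that claim, here is a small Python sketch. It assumes that a ‘combination’ means any set of two or more first principles taken together, which is one reading chosen for illustration rather than a formal definition; the function names are likewise hypothetical.

```python
from math import comb


def pair_count(n_principles: int) -> int:
    """Number of ways to pick exactly two of n principles."""
    return comb(n_principles, 2)


def combination_count(n_principles: int) -> int:
    """Number of subsets containing at least two of n principles:
    all 2**n subsets, minus the empty set and the n single-item sets."""
    if n_principles < 0:
        raise ValueError("count must be non-negative")
    return 2 ** n_principles - n_principles - 1


if __name__ == "__main__":
    # The knowledge base grows linearly: ten more principles each step.
    for n in (10, 20, 30, 40):
        print(f"{n:>3} principles: {pair_count(n):>4} pairs, "
              f"{combination_count(n):,} combinations")
```

Under that assumption, each linear step of ten extra principles multiplies the number of possible combinations roughly a thousandfold, and by thirty principles the count has already passed a billion.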

Except, of course, TRIZ. Which, sadly, brings us back full circle. As I experienced at the University of Bath, the vast majority of the technology education community either has no knowledge of TRIZ, or has no desire to acquire said knowledge. If they did, it would offer a solution to many of the innate problems of today’s technology education dysfunction. Which I believe can be summarised as follows:

- Virtually nothing gets taught from first principles anymore, so students don’t understand the world at a first principle level. This means they often know what to do (what the computer tells them) but have no idea why. TRIZ, by asking the questions that it did, accidentally gave the world a comprehensive database of first principles.

- Students are taught how to answer questions rather than ask them. Finding answers is a much easier job today than it was 40 years ago. Today’s technologists need to be taught how to ask better questions. TRIZ helps to do this through the discovery that technology evolution has a very clear direction, and that progress occurs through a series of definable discontinuities.

- Nearly all modern problems involve people somewhere, and hence all are complex. Meaning that teaching students how to solve only complicated problems is no longer sensible.

- The world of technology is now massively hampered by extreme specialisation. The 20th Century was the time for specialists. The 21st Century technologist needs to be a boundaryless generalist.

- The best solutions to any complex problem almost inherently emerge from a combination of partial solutions. Few if any students are taught how to explore and make sense of the potentially billions of combinations to converge on solutions that are both coherent and meaningful. Understanding how to make the right combinations means understanding how to deal with the ‘betweens’.

The new curriculum is built to address these five core problem areas. In summary, it is about focusing on signal. By avoiding the task of teaching noise (a problem endemic in the computing world, where lecturers continually fight a losing battle against ever-changing languages and protocols), and instead focusing on first principles, we become able to solve a crucial contradiction: we get to teach a more comprehensive, useful and future-proof curriculum in a shorter amount of time. That’s ‘useful’ in terms of benefit to the students, benefit to their future employers, and benefit to society at large.

Here’s what the basic curriculum looks like:

Module 1: Foundations – First-Principles/Systems/S-Curves

Module 2: Ethics & Morality – technologist rights and responsibilities

Module 3: Someone, Somewhere Already Solved Your Problem – finding needles in the technology haystack

Module 4: Function & Design – Design-4-X/contradiction-solving/rules-for-breaking-rules

Module 5: Technology Compass – IFR/Roadmaps/Resources

Module 6: Energy – energy flow/transfer/losses/S-Fields

Module 7: Control – sensors/feedback-loops/control-system-design

Module 8: Users, Abusers & AntiFragile System Design – understanding humans and how we interact with technology

Module 9: Real-World Projects – students will work with sponsoring companies on at least five real-world challenges to deliver actual, tangible-benefit-delivering solutions.

Module 10: Dissertation – adding a new needle (‘signal’) to the global technology haystack

At the moment, we’re looking to teach it either as a stand-alone two-year degree programme, or as a one-year option to ‘re-orient’ students who have graduated from existing technology degrees and help them cope in the New World we’re all now having to get used to.

Hopefully more news in the coming months. Job one: the tussle to win over a critical mass of technology educators.